When weeding with an autonomous robot, a key consideration is how to avoid obstacles. Strategies fall into the following two groups.

- Active obstacle avoidance: obstacles are detected and avoided in advance using an IR sensor, ultrasonic sensor, ToF sensor, or camera (CMOS sensor).

- Passive obstacle avoidance: the robot detects that it has hit an obstacle using an accelerometer, pressure sensor, or touch sensor, and then moves away from it.

In the current case, the robot has a camera, a hall effect sensor at the foot tip, and an accelerometer.

In the current development phase, the camera is used only to recognize target weeds, not to avoid obstacles. This is because the basic gait of the crab robot is a side walk, so the direction of travel is perpendicular to the camera's optical axis. The camera therefore cannot provide timely image information along the direction of travel.

The camera currently in use has a 160-degree field of view, but that is not sufficient to cover the direction of travel. To capture it, we would need to choose one of the following options: make the camera rotatable, add a second camera, or have the robot turn 90 degrees in place. These approaches lead to a more complex mechanical structure or gait pattern.

Thus I chose the passive obstacle avoidance approach. The process for avoiding obstacles is described in the following list.

- Signals (X, Y, Z) from the accelerometer connected to the Arduino Nano Every are sampled at a constant time interval. The current version has no gyro sensor because the robot has 6 legs and so rarely gets stuck in an unstable posture. However, a foot tip may get stuck in a pit, causing the posture to change suddenly. To handle this, we can use the signal from the hall effect sensor attached to the foot tip. I'll describe how to use it in another post.

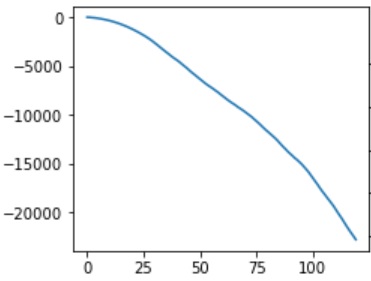

- A reference accelerometer signal with no obstacle should be recorded in advance for each gait pattern (forward, backward, turn left, and turn right). The following chart shows the displacement in the X direction for the forward gait pattern. It decreases monotonically, which means there is no obstacle. The displacement is obtained by taking the cumulative sum of the acceleration twice.

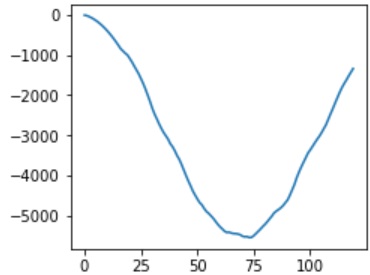

- The next chart shows the displacement in the X direction when there is an obstacle. The gradient clearly changes at around step 75.
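
The displacement used in these charts comes from summing the acceleration twice. A minimal Python sketch of that step might look like the following. The function name `displacement_from_acceleration` and the `dt` parameter are assumptions for illustration and are not taken from the robot's firmware. The sketch also does not remove sensor bias or gravity, which a real signal would likely need.

```python
from itertools import accumulate


def displacement_from_acceleration(accel, dt=1.0):
    """Estimate displacement by taking the cumulative sum of acceleration twice.

    accel: acceleration samples along one axis, taken at a constant interval.
    dt:    sampling interval (hypothetical parameter; 1.0 gives plain cumulative sums).
    """
    # First cumulative sum: acceleration -> velocity.
    velocity = list(accumulate(a * dt for a in accel))
    # Second cumulative sum: velocity -> displacement.
    return list(accumulate(v * dt for v in velocity))


# Made-up samples: a constant negative acceleration along X.
samples = [-1, -1, -1, -1]
print(displacement_from_acceleration(samples))
```

With a constant negative acceleration the result keeps decreasing step by step, which is the kind of monotonic curve described for the obstacle-free case.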

Compare the two signals, that is, the signal recorded with no obstacle and the signal measured when there is one. If the difference between them exceeds a pre-defined threshold, the gait pattern should change from forward to backward, followed by a left or right turn. The turn direction is chosen randomly. To set the threshold that triggers the gait change, I'll record the accelerometer signal several times and decide heuristically. If the threshold is too small, the change is triggered too often and the robot backs up and turns repeatedly. Conversely, if the threshold is too large, the robot keeps trying to go forward even when it can't advance. Many trials are needed to find an appropriate threshold. Developing a learning model is the next target.
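
The comparison and gait change could be sketched in Python roughly as follows. This is only an illustration under stated assumptions: the function names `first_deviation` and `next_gaits`, the gait labels, and the sample values are all hypothetical, and the threshold in the example is arbitrary rather than a tuned value.

```python
import random


def first_deviation(reference, measured, threshold):
    """Return the first step where the two displacement signals differ by more
    than the threshold, or None if they never do."""
    for step, (ref, cur) in enumerate(zip(reference, measured)):
        if abs(cur - ref) > threshold:
            return step
    return None


def next_gaits(reference, measured, threshold, rng=random):
    """Choose the next gait patterns (labels are hypothetical)."""
    if first_deviation(reference, measured, threshold) is None:
        # No obstacle detected: keep walking forward.
        return ["forward"]
    # Obstacle detected: back up, then turn in a randomly chosen direction.
    turn = rng.choice(["turn_left", "turn_right"])
    return ["backward", turn]


# Made-up displacement curves: the measured one flattens out after step 2.
reference = [0, -1, -2, -3, -4]
measured = [0, -1, -2, -2, -2]
print(first_deviation(reference, measured, threshold=1.5))
print(next_gaits(reference, measured, threshold=1.5))
```

Lowering `threshold` in this sketch makes the backward-and-turn response fire earlier and more often, while raising it delays or suppresses the response, which mirrors the trade-off described above.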